Six questions to help work out if a quasi-experimental impact evaluation is possible

Over the last fifty years, social scientists have developed some fiendishly clever ways of generating robust estimates of the impact of a policy or intervention without running a randomised controlled trial (RCT). However, one downside of this cleverness is that quasi-experimental methods can seem mysterious, as though they could magically identify an impact as long as someone wants to find it. Sadly, this is not the case. The people who developed the methods also specified a set of conditions and assumptions that need to hold for us to be confident that they provide a good estimate of the intervention’s impact.

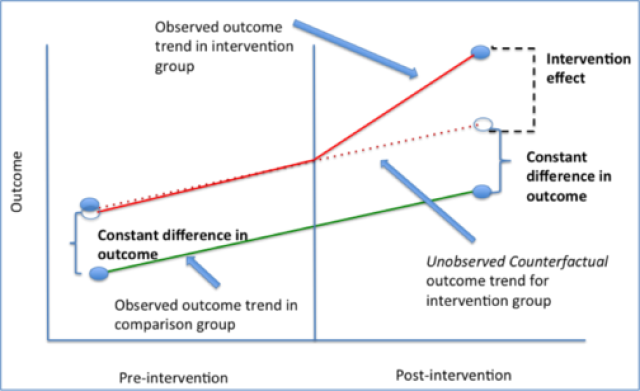

Though there are a range of methods, the archetypal quasi-experimental design (QED) approach is difference-in-differences (DiD) analysis. At a simple level, this compares the change in outcomes in an intervention area with the change in outcomes in a non-intervention area over the same period. A key characteristic of a DiD approach is that the non-intervention area does not have to look exactly the same as the intervention one, but it does need to be changing in the same way. In the accompanying diagram, outcomes in the intervention area are represented by the red line and those in the comparison area by the green one. The strengths of this model are that it doesn’t just look at outcomes in the intervention area before and after the intervention (as outcomes could have been improving anyway) and doesn’t just compare outcomes in an intervention area and a comparison one (where an apparent impact could be due to pre-existing differences). Thus the design addresses some of the key issues that face impact evaluation using data not generated through an RCT.
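To make the arithmetic concrete, here is a minimal sketch in Python of the comparison described above. The outcome figures and the function name `did_estimate` are invented for illustration and do not come from any real evaluation; a real analysis would normally use a regression model with standard errors rather than a simple difference of means.

```python
from statistics import mean

# Hypothetical outcome values (e.g. incidents per 1,000 people), invented for illustration.
intervention_before = [50, 52, 51]
intervention_after = [44, 43, 45]
comparison_before = [40, 42, 41]
comparison_after = [39, 40, 41]


def did_estimate(treat_pre, treat_post, comp_pre, comp_post):
    """Change in the intervention area minus change in the comparison area."""
    treat_change = mean(treat_post) - mean(treat_pre)
    comp_change = mean(comp_post) - mean(comp_pre)
    return treat_change - comp_change


estimate = did_estimate(
    intervention_before, intervention_after, comparison_before, comparison_after
)
print(estimate)
```

With these made-up figures the intervention area falls by 7 and the comparison area by 1, so the estimated impact is a reduction of 6. That reading only holds if the two areas would otherwise have moved in parallel.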

The key assumption in the difference-in-differences design is that outcomes in both areas would have continued in parallel without the intervention. This can’t be tested directly because you can’t see what would have happened in the intervention area if you hadn’t run the intervention. But you can test whether the outcomes in the two areas were running in parallel before the intervention was introduced, which is known, unsurprisingly, as the parallel trends test.

Source: https://www.publichealth.columbia.edu/research/population-health-methods/difference-difference-estimation
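A rough version of the pre-intervention check described above can be sketched in Python by comparing the slope of the outcome in each area before the intervention. The data, the helper `slope` and the `tolerance` threshold are all assumptions made for illustration; this is an informal eyeball check, not a formal statistical test, which would typically be run as a regression with standard errors.

```python
def slope(values):
    """Least-squares slope of values against time index 0, 1, 2, ..."""
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    num = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


# Hypothetical pre-intervention outcomes for four periods, invented for illustration.
intervention_pre = [50.0, 48.0, 46.0, 44.0]
comparison_pre = [40.0, 38.0, 36.5, 34.0]

# The levels differ (around 47 versus around 37), which DiD allows;
# what matters is whether the trends are similar.
slope_gap = abs(slope(intervention_pre) - slope(comparison_pre))

tolerance = 0.5  # hypothetical threshold, chosen arbitrarily for this sketch
looks_parallel = slope_gap <= tolerance
print(slope_gap, looks_parallel)
```

If the pre-intervention slopes diverge noticeably, the comparison area is probably not a credible stand-in for what would have happened in the intervention area.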

The basic set up of a difference-in-differences analysis implies six questions that can help indicate whether a quasi-experimental approach might be feasible:

1. Is there (or can you gather) quantitative data on the relevant outcome in the relevant area and for the relevant population?

2. Is there data on the relevant outcome before as well as after the intervention?

3. Is there data on the relevant outcome over a period within which you’d expect to see change?

4. Are there or were there non-intervention areas?

5. Is there data on outcomes for non-intervention areas before as well as after the intervention?

6. Was there an element of randomness in the way the intervention and non-intervention areas were chosen?

Answering ‘no’ to the first of these questions definitely means a QED approach isn’t possible. If you can answer ‘yes’ to the first question and to some or all of the others, a QED approach may be possible, though this is not guaranteed. (As noted above, you could answer ‘yes’ to all six questions, but if the parallel trends test fails, a difference-in-differences approach won’t be possible.) If you answer ‘yes’ to the first question but ‘no’ to all the others, then a QED approach almost certainly isn’t possible.
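The decision rule in the paragraph above can be written down as a small Python sketch. The function name `screen_qed` and the wording of the returned labels are assumptions for illustration; the rule is only a first screen and does not replace the parallel trends test.

```python
def screen_qed(answers):
    """Apply the rough screening rule to six yes/no answers.

    `answers` is a list of six booleans (True for yes, False for no),
    given in the order the questions are posed.
    """
    if len(answers) != 6:
        raise ValueError("expected answers to exactly six questions")
    first, others = answers[0], answers[1:]
    if not first:
        # No usable outcome data at all.
        return "not possible"
    if not any(others):
        # Outcome data exists, but nothing else is in place.
        return "almost certainly not possible"
    # A QED may be feasible, subject to further checks.
    return "may be possible"


print(screen_qed([True, True, True, True, True, False]))
```

Even a clean set of ‘yes’ answers only returns "may be possible", reflecting the point that the design can still fail once the data is examined.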

These questions can be supplemented by other questions, such as:

At what level of granularity is the data available?

For what periodicity is the data available (i.e. daily, weekly or monthly)?

The greater the granularity and the more frequent the data, the more likely it is that a QED design will be possible, and the more likely it is that a QED will detect an impact if there has been one.

These questions are a fairly rough-and-ready test, but I think they do provide a good indication of whether a QED evaluation is possible without getting into technical intricacies. As such, they may aid communication between policy makers, intervention developers and commissioners, on the one hand, and evaluators on the other.